7 Key Pitfalls To Avoid In MedTech Clinical Data Collection


Clinical data collection is the process in medical research and patient care that involves gathering and analyzing data generated from patient care activities and clinical trials. In the MedTech industry, the collection of clinical data plays a critical role in regulatory compliance, product development, and market access.

Páll Jóhannesson and Jon Bergsteinsson are clinical data experts and co-founders of Greenlight Guru Clinical, with 10 years of experience in the MedTech field. In that time, they have watched medical device companies trip over the same pitfalls again and again. As a result, data collection becomes expensive, time-consuming, and complex.

Páll Jóhannesson and Jon Bergsteinsson have therefore decided to share the most common pitfalls they have encountered and explain how device manufacturers can avoid them. This blog post summarizes the key insights they presented in a webinar, which you can watch on demand here.

The current challenges for devices & diagnostics

Below are some of the most pressing challenges for medical device & diagnostic companies that we have identified over the years.

1. MedTech clinical operations are different

Device studies are often small. They also require types of data that drug studies do not normally collect, because in clinical practice a device is usually used by someone who interacts with it. Clinical data collection and clinical operations for devices span the device's whole life cycle: from early-stage studies, through studies for market approval, to post-market activities.

Clinical data is gathered through various means across different projects. A clinical trial or study is only one source. Clinical data for medical devices can be gathered in many different ways and by different individuals, including healthcare providers, physicians, investigators, and patients.

2. Changes in regulations and focus

Another challenge in clinical data capture for medical devices & diagnostics is regulators' ever-changing focus on clinical data. New European regulations (MDR, IVDR) and the FDA's increased focus on clinical data have raised both the amount and the quality of clinical data required. This applies not only to market access but also to keeping the device on the market. Read more about MDR compliance in our practical guide for device manufacturers.

3. Updated standards

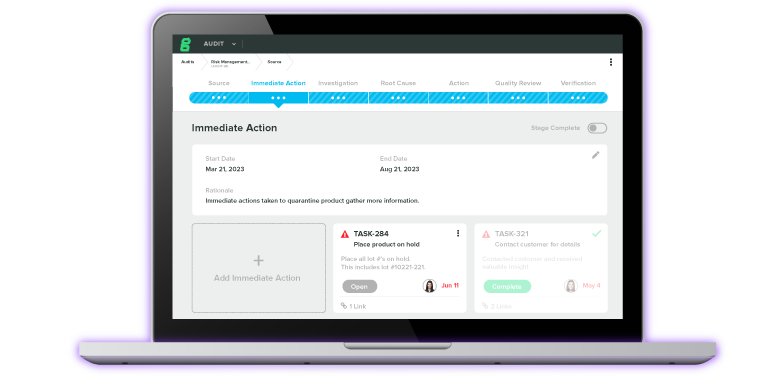

The standards that govern good clinical practice in clinical investigations of medical devices have recently been updated. ISO 14155:2020 places increased requirements on clinical operations, both pre- and post-market. So if you plan to collect any clinical information about your device or diagnostic in a post-market setting, you may need an electronic data capture (EDC) solution to support those activities.

Because of these changes in standards and regulations, conducting studies solely on paper is no longer good enough. See how the world-leading hearing aid manufacturer Oticon switched from paper-based data collection and management to EDC software.

4. Value-based procurement

Another major challenge for devices & diagnostics is that buyers and procurement experts in the healthcare sector now place much more emphasis on value-based care. Manufacturers therefore need to document why one device should be selected over another. Producing the clinical data that supports that choice is now crucial for market access and also for selling the product.

All in all, it is increasingly clear that manufacturers of medical devices & diagnostics need to collect more data. That is where an electronic data capture platform comes in. Let us show you how it works!

The Problem with Traditional eClinical Solutions

Next, we look at why traditional eClinical solutions can be unfit for collecting and managing clinical trial data in MedTech studies.

1. Data collection options

Electronic data capture is becoming the norm in the medical device space. However, the market is saturated with solutions that were built for a different industry. Traditional electronic solutions for clinical operations are limited because they are designed around trials with a different structure, e.g., the "phase 1-4" trials used for pharmaceuticals.

This phase structure does not map onto the clinical development of devices & diagnostics, which follows a completely different path than that of pharmaceuticals. As a result, the solutions on offer are a poor fit. Manufacturers who use them risk making their data collection process even more complicated, which often increases resource requirements and lowers clinical data quality.

2. Data formats

The data format standards commonly used for pharmaceuticals also differ completely from those for devices. The FDA and the European authorities already reflect this: they do not require device data to be formatted the same way as pharmaceutical data, because those formats are simply less relevant for devices. There is an unfounded myth in the industry that the FDA requires the same clinical data standard for device submissions as for pharmaceutical submissions, but this is not the case.

All this shows that you should not select solutions designed for another industry.

3. Pricing, setup, maintenance

Most clinical software solutions today are designed for large pharmaceutical companies. Yet 97% of the medical devices & diagnostics industry consists of small and medium-sized enterprises. These companies lack the budget, staff, resources, and often the experience that big pharmaceutical companies have for buying the right solutions and maintaining them properly. The result is excessive strain on operations, which can become very costly because these solutions simply demand too many resources.

4. Non-existing compliance documentation

Many traditional solutions were designed for other parts of the life science industry, and the documentation they provide is often not suited to medical devices. Device operations have their own documentation requirements, e.g., those of ISO 14155. If you choose a solution that has not been adapted to these requirements, you will have to do the extra work yourself.

Let's look next at the 7 most common pitfalls for clinical data collection in MedTech studies.

7 Common Pitfalls in MedTech Clinical Data Collection

We identified these pitfalls over 10 years of work on more than 250 medical device studies around the world. Working in the MedTech industry and in clinical operations, we kept encountering the same 7 pitfalls. We decided to share them to help companies of all sizes make better choices that lead to better products.

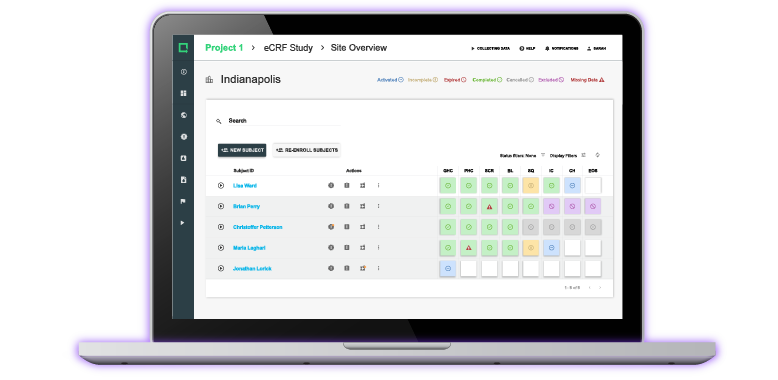

#1 Starting Off on Paper -> Go Digital

Instead of collecting clinical data on paper, we strongly recommend going digital. Companies that choose a digital approach collect and monitor data much more efficiently. They also save time and resources when complying with ISO 14155:2020. A digital solution lets you monitor clinical data remotely and see what is happening in your study in real time.

Paper, by contrast, is less reliable: it can get lost or damaged, and it is hard to collaborate on. Relying only on paper also has a more basic drawback. At some point, all that data must be transferred to a computer anyway, so you may as well start digitally and save yourself that time.

#2 Collecting Too Much Data -> Start at the End

We have very often seen companies collect more data than they need. This happens to early-stage companies running their first clinical study and also to larger companies with massive budgets. The extra data greatly increases the workload on clinical staff (clinical investigators, coordinators, nurses, etc.), who may then become frustrated and less motivated. And the larger the data set, the more time you need to set aside for it.

So, the solution to this is to start at the end:

- Hypothesis

The most important step in planning any clinical data collection project is to define your hypothesis. Without an established scientific hypothesis, you will have a hard time collecting the right data. If you have a hypothesis but still find yourself collecting every possible piece of data around it, trim the data set to exactly what your statistical analysis plan and the report you want to generate require.

- Statistical analysis plan

The second step is to define exactly what you want to present. Which statistics or graphs should your data produce so that you can present it to a European authority, to the FDA for review, or to anyone else who might examine your data?

- Data collection plan

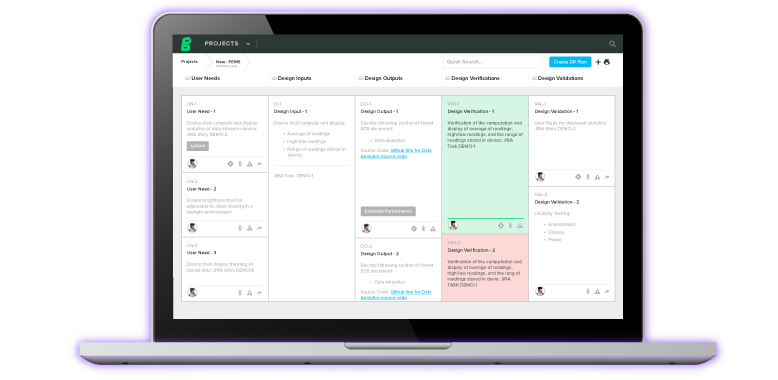

The third step is to define your data collection plan. This plan is not a document or template you can download online. It is simply a definition of how you want to collect your data, and that can be done in many different ways.

Greenlight Guru Clinical offers templates designed to help with exactly this. In essence, the plan defines three things: which questions you need to ask to produce the reports you decided on, how best to word those questions, and how they fit together in a digital setup (e.g., an electronic case report form in EDC software).

- Data collection

The last step is to design your data collection activity. This step is closely tied to your protocol design, where you define the number of visits or timepoints in an activity such as a clinical investigation. Here, you decide which parameters to collect, at which timepoints, and with which methods. What we often see is that people begin at this step.

After collecting their data, they go back and try to define a hypothesis that fits. This approach is, of course, not scientific. Even when a hypothesis has been defined, we often see protocols adjusted and amended along the way because they were not designed backwards from it.

A Danish publication on clinical trial protocols submitted to the ethics committee reports that over 80% of all protocols submitted over a 10-year period were amended more than 3 times during the study period. That is simply because they were not designed well enough. You could object that "you cannot know everything beforehand", but starting at the end is still good practice before you collect any data.

Here is a real case from a study that ran in Greenlight Guru Clinical a while back. Two weeks into the study, numerous patients had been enrolled, and the site team could not keep up with data entry. The study design had not been thought through, and they were collecting too much data. We ended up stopping the study and redesigning the whole thing.
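One way to make "start at the end" concrete is a traceability check between the statistical analysis plan (SAP) and the eCRF. Every collected variable should feed at least one planned analysis, and every analysis should have the variables it needs. Below is a minimal Python sketch of that check. The `Analysis` and `CrfField` classes, the `trace_plan` function, and the example variables are hypothetical illustrations only. They are not part of Greenlight Guru Clinical or any other EDC product's API.

```python
from dataclasses import dataclass, field


@dataclass
class Analysis:
    """Hypothetical: one output defined in the statistical analysis plan."""
    name: str
    required_variables: set[str]


@dataclass
class CrfField:
    """Hypothetical: one eCRF question and the visits where it is collected."""
    variable: str
    visits: list[str] = field(default_factory=list)


def trace_plan(analyses, crf_fields):
    """Compare the eCRF against the SAP.

    Returns (unused, missing):
      unused  - collected variables that no analysis needs (candidates to drop)
      missing - variables an analysis needs that the eCRF never collects
    """
    needed = set()
    for analysis in analyses:
        needed |= analysis.required_variables
    collected = {f.variable for f in crf_fields}
    return sorted(collected - needed), sorted(needed - collected)


# Hypothetical study, planned backwards: hypothesis -> SAP -> eCRF.
sap = [
    Analysis("primary_endpoint", {"systolic_bp_device", "systolic_bp_reference"}),
    Analysis("usability", {"sus_score"}),
]
ecrf = [
    CrfField("systolic_bp_device", ["baseline", "week_4"]),
    CrfField("systolic_bp_reference", ["baseline", "week_4"]),
    CrfField("shoe_size", ["baseline"]),
]

unused, missing = trace_plan(sap, ecrf)
print("Not used by any analysis:", unused)
print("Required but not collected:", missing)
```

In this example, `shoe_size` is flagged as data that no analysis uses, so it is a candidate to drop before the study starts. `sus_score` is flagged as a gap in the data collection plan. The point of running the check before enrollment is to keep site workload down to exactly what the report needs.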

#3 Forgetting the Individual -> Include PRO Data in Your Study

As a device manufacturer, you understandably focus on your device. You run pre- and post-market activities to study how it performs on safety and efficacy parameters. However, when you define how to run your study, remember that the individual is at the center of it all. This person chooses to participate and share their data, and above all wants a better life or some benefit from using, or being treated with, your device. It is therefore a good idea to include Patient-Reported Outcome (PRO/ePRO) data in your study.

Regulatory bodies and competent authorities now place greater importance on having PRO data for your device. Even though this is being pushed much harder these days, we still see too many companies forget to include it. PRO data is not hard to obtain, because patients are usually very motivated to provide it.

PRO data can be very valuable later, when you go to market or need to document safety and performance, because it shows how your device affects the people who use it. Learn more about how Greenlight Guru Clinical's integrated ePRO makes PRO collection easy.

#4 Relying Too Much on KOLs -> Go Beyond Clinical Evidence

We are well aware that many medical device companies have little clinical experience in-house and rely on their key opinion leaders (KOLs) when designing studies. However, the clinical evidence is not the only thing that matters. Listening only to the clinical investigators who recommend specific endpoints is not enough.

For example, if your device measures blood pressure, you must of course measure blood pressure itself, because you will most likely compare it against the clinical standard. But remember that bringing a blood pressure measuring device to market is neither complex nor new.

Thousands of such devices are already on the market, which can make it harder to make yours stand out. This applies to equivalent or predicate devices and to novel technologies alike, because the demand for clinical data comes not only from regulators but also from buyers.

So think ahead. During the clinical trial, you can ask questions about factors that affect market access and health economics, about the usability of the device, or about staff experience with it. These questions may be far more valuable than focusing only on clinical performance and outcomes.

Remember pitfall #2, though: do not collect too much data. There is a thin line between too much data and too little. There is no rule of thumb for knowing when you have collected enough. The general principle is simply to think a little further ahead and go beyond the clinical evidence.

Consider your market access strategy, sales, operations, and procurement. Then ask whether a minimal set of extra parameters or outcomes in your study could support that strategy.

#5 Forgetting the Clinical Workflow -> Test, Test, and Test

When planning your study, remember that the way your device is used in practice will shape your study design. In other words, design your study so that entering data or giving feedback on the device's performance is a natural part of using it.

So, avoid putting extra strain on clinicians at the wrong timepoints, and avoid adding extra steps that lead to worse data. Also keep in mind that sites differ. Each clinic has its own habits and work processes. Involve your end users as much as you can when you design and plan your study. Otherwise, you may not get the data you need.

The solution to all this is to test, test, and test. We cannot stress enough how important it is to test your study with real users. Start by testing internally with colleagues. Next, test with clinicians if they will be collecting the data, and then test with patients. Competent Authorities in Europe have started demanding that you involve patients when you define your eCRF (electronic Case Report Form), and at every step before you apply to the Ethics Committee.

Testing shows you what stands in the way of getting the data quality you need, and where you put too much strain on clinicians or patients during data entry. You can then identify these risks and mitigate them. One option is to smooth out the data collection plan. Another is to collect less data in this study and gather the additional data in a follow-up study. With careful planning, running 2 separate studies instead of 1 does not have to cost more. It only requires correct planning and making sure your resources are spent wisely.

In one real case, a device study was initiated at a site that had previously served as a test site. Testing there went very well. But once the study began, another site was added, and its building layout was different. The operating room was far away from the computers, and no tablets or computers were allowed inside.

Anyone who needed to use Greenlight Guru Clinical had to leave the operating room. In addition, staff could not randomize patients because the necessary locks and cards were not accessible. The workflow clearly had not been thought through well enough. This example shows how simple day-to-day details can make or break your study protocol.

#6 Mixing Data Collection Tools -> Define a Standard

Another pitfall we see at companies is "mixing data collection tools". It is probably one of the most common pitfalls we have encountered, because there are countless software tools out there. In general, when people do not know which tool is the "correct" one for their problem, they improvise a solution themselves.

That is how people end up using Excel for one type of data, e.g., case reports, case series, or feedback from clinicians. We often see this inside a single company. One colleague uses Excel, and another uses a survey tool to gather clinical experience in a post-market surveillance (PMS) setup. A third colleague who runs clinical operations lacks the funds for a proper tool and runs everything on paper.

Mixing tools in this way is strongly discouraged. It often leads to chaos and to a lot of time spent fixing issues and migrating data. The solution is to define a standard operating procedure (SOP) for data gathering that specifies which software tool to use. The SOP can be part of your quality management system (QMS).

This gives you a better overview, restores much of your control, and prevents the chaos. It can also improve data quality overall, because the same clinical data ends up in different forms and at different quality levels depending on whether it was captured in Excel, on paper, or in a survey tool.

A common tool that gathers all operations in one place also gives executives, investors, and board members the oversight they need. One of the worst experiences is an email just before a board meeting asking for the latest clinical data you have collected. If one type of data is in Excel, another on paper or OneDrive, and a third in some other tool, you are in for a hard time.

Keeping the data in one place can also strengthen your regulatory compliance and simplify quality assurance (QA) around data collection. With multiple tools, you risk non-compliance, because keeping 3-4 solutions in order at all times is much harder. Schedule a free, non-binding demo of Greenlight Guru Clinical to see how easy your data collection and management process can be.

#7 Forgetting GCP & Validation -> Go with Compliance

Too often we have seen MedTech companies try to save time or cut corners, often because they lack the resources to invest in state-of-the-art tools or software. They end up with the wrong solution and forget about Good Clinical Practice (GCP) or validation. They move too fast and submit data collected on a non-validated platform. Notified bodies or competent authorities then reject it, because the company cannot document how the platform used to collect the data was validated.

The solution is to go with compliance. When working with vendors, ask them to document how they can help you comply with ISO 14155, FDA 21 CFR Part 11, and any other relevant regulatory requirements. If they cannot do that, choose another vendor that can. Remember that a solution cannot be compliant by itself.

Compliance with, for example, ISO 14155 can only be assessed in how you work with a solution. Don't be fooled by anyone claiming that a solution is compliant on its own. Make sure you work in a compliant way with all your solutions, and have the necessary documentation ready for inspection.

Frameworks and guidelines for both the Americas and Europe address this specifically. They help companies produce the documents that show a software solution has been validated according to GCP requirements. It is better to be safe than sorry: ask for this documentation up front instead of relying only on the vendor's statements.

Greenlight Guru Clinical EDC software facilitates compliance with ISO 14155 and FDA 21 CFR Part 11. It provides ready-to-use templates and a standard operating procedure (SOP) template that help study stakeholders use Greenlight Guru Clinical correctly. Contact us to find out how our EDC solution can help streamline your data collection efforts.

Greenlight Guru Clinical - the all-in-one eClinical solution

Since our inception, we have focused on helping MedTech companies bring safer medical devices to market. As regulations such as the EU MDR become more stringent, our software is ready to support the MedTech industry in both pre- and post-market activities.

As the only eClinical solution made specifically for medical devices & diagnostics, Greenlight Guru Clinical is the right partner to accelerate your clinical trials and ensure EU MDR compliance. Our platform is pre-validated and comes with ready-to-use templates and SOPs to streamline your path to compliance.

Keep your clinical data in one place and reap the benefits, such as lower costs and fewer resources spent on collecting and managing your data. If you're ready to learn how we can accelerate your go-to-market process or help you meet EU MDR post-market surveillance requirements, book a personalized demo.

Páll Jóhannesson, M.Sc. in Medical Market Access, is the founder and Managing Director of Greenlight Guru Clinical (formerly SMART-TRIAL). Páll was previously the CEO of Greenlight Guru Clinical, where he led the team that created the only EDC made specifically for medical devices.